TransMIXR – Immersive AR and VR Platform

While the future of media experiences still has to be written, the eXtended Reality (XR) and Artificial Intelligence (AI) technologies of the TransMIXR project provide a unique window of opportunity for the European Creative and Cultural Sector (CCS) to reimagine digital co-creation, interaction and engagement possibilities in immersive Augmented Reality (AR) and Virtual Reality (VR) environments.

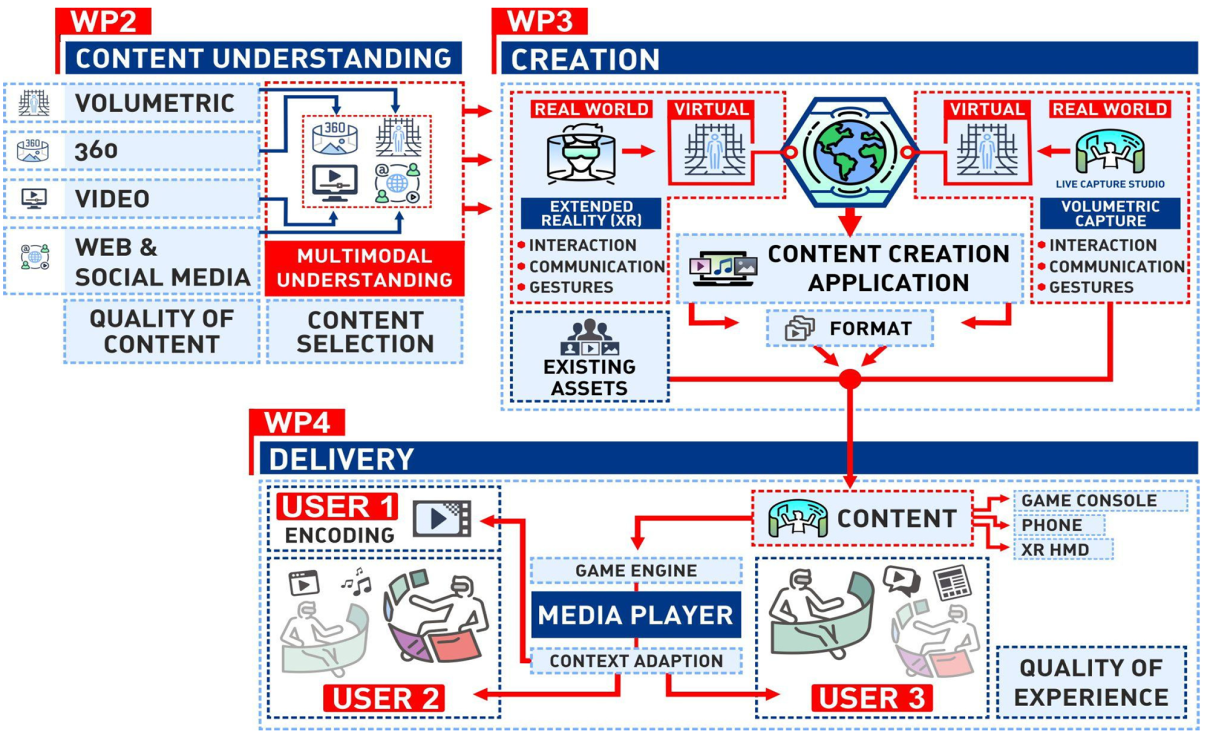

The TransMIXR platform will provide (i) a distributed XR Creation Environment that supports remote collaboration and concurrent application scenarios of the Storypact editor, and (ii) an XR Media Experience Environment for the delivery and consumption of immersive media experiences. Ground-breaking AI techniques for the understanding and processing of complex media content will enable the reuse of heterogeneous assets across immersive content delivery platforms.

Immersive TransMIXR Platform for AR/VR Content Creation and Delivery

Using the Living Labs methodology, TransMIXR will develop and evaluate four pilots that bring the vision of future media experiences to life across multiple CCS domains: (i) news media and broadcasting, (ii) performing arts, and (iii) cultural heritage. Additionally, the project will harness the creativity of the CCS and will forge interdisciplinary collaborations to demonstrate how immersive social experiences could be transferred to new application areas beyond the CCS. The project aims to contribute to the Media and Audiovisual Action Plan from the European Commission, in particular by boosting the adoption of XR technologies, opening new business model opportunities in new application areas and markets, and gaining worldwide leadership in XR technologies while being deeply grounded in European values such as veracity, diversity, connectedness and universality.

Interactive Visualization for AR and VR Environments

webLyzard will conceptualize and implement algorithms for computing content metrics and develop an interactive visual analytics dashboard to monitor these metrics. Advanced content exploration and visualization capabilities will seamlessly integrate the content assets previously ingested, the metadata and the visualization methods. Specific widgets will be selected and customized. Interactive tooltips to inspect metadata and trigger drill-down operations will enable a thorough evaluation of the ingested content in terms of completeness and quality of (meta)data assets for the pilots.
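
As a rough illustration of what a completeness metric for (meta)data assets could look like, the following minimal sketch scores each asset by the share of required metadata fields that are present and non-empty. It is not the project's actual algorithm: the field names in `REQUIRED_FIELDS` and the functions `completeness` and `average_completeness` are hypothetical and chosen only for this example.

```python
from typing import Iterable, Mapping, Sequence

# Hypothetical required fields; the project does not publish a metadata schema here.
REQUIRED_FIELDS = ("title", "creator", "language", "license")

# Values treated as "missing" when checking a field.
_EMPTY_VALUES = (None, "", [], {})


def completeness(asset: Mapping[str, object],
                 required: Iterable[str] = REQUIRED_FIELDS) -> float:
    """Return the fraction of required fields that are present and non-empty."""
    required = tuple(required)
    if not required:
        return 1.0  # nothing is required, so the asset is trivially complete
    filled = sum(1 for field in required
                 if asset.get(field) not in _EMPTY_VALUES)
    return filled / len(required)


def average_completeness(assets: Sequence[Mapping[str, object]]) -> float:
    """Return the mean completeness over a collection (0.0 if it is empty)."""
    if not assets:
        return 0.0
    return sum(completeness(asset) for asset in assets) / len(assets)


if __name__ == "__main__":
    partial = {"title": "Opening night", "creator": "", "language": "en"}
    full = {"title": "Archive tour", "creator": "Museum",
            "language": "en", "license": "CC BY 4.0"}
    print(completeness(partial))
    print(average_completeness([partial, full]))
```

A dashboard widget could then plot such scores per pilot or per asset type, with drill-down to the individual assets whose fields are missing.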

XRaaS-Engine for Impact Metrics

The webLyzard Stakeholder Dialogue and Opinion Model, or WYSDOM, is a real-time impact and communication success metric based on AI-enabled opinion mining methods initially developed for the U.S. Department of Commerce. The Horizon 2020 Project ReTV significantly extended the underlying model to provide seamless integration of content and audience metrics. TransMIXR will deliver interactive visualizations of these metrics through an XR-as-a-Service (XRaaS) Engine. The resulting AR/VR environment will illustrate how perceptions evolve over time and how biases (gender, politics, etc.) impact these perceptions.

TransMIXR Project Consortium

Coordinated by the Technological University of the Shannon – Midlands Midwest, the TransMIXR consortium with 19 organizations from 12 European countries includes (i) leading expertise in European research, media and innovation programs, (ii) in-depth knowledge of AI & XR and their application to the media sector, and (iii) representatives of key European application domains including News Media, Cultural/Education and Creative.

This project has received funding from the European Union’s Horizon Europe research and innovation program under grant agreement No 101070109. Call: HORIZON-CL4-2021-HUMAN-01-06, Innovation for Media, including eXtended Reality (IA).
